AMD recently held its Advancing AI conference. In addition to releasing the brand-new Ryzen 8040 series AI PC chips, it finally announced shipments of two heavyweight AI compute products: the MI300X GPU and the MI300A APU.

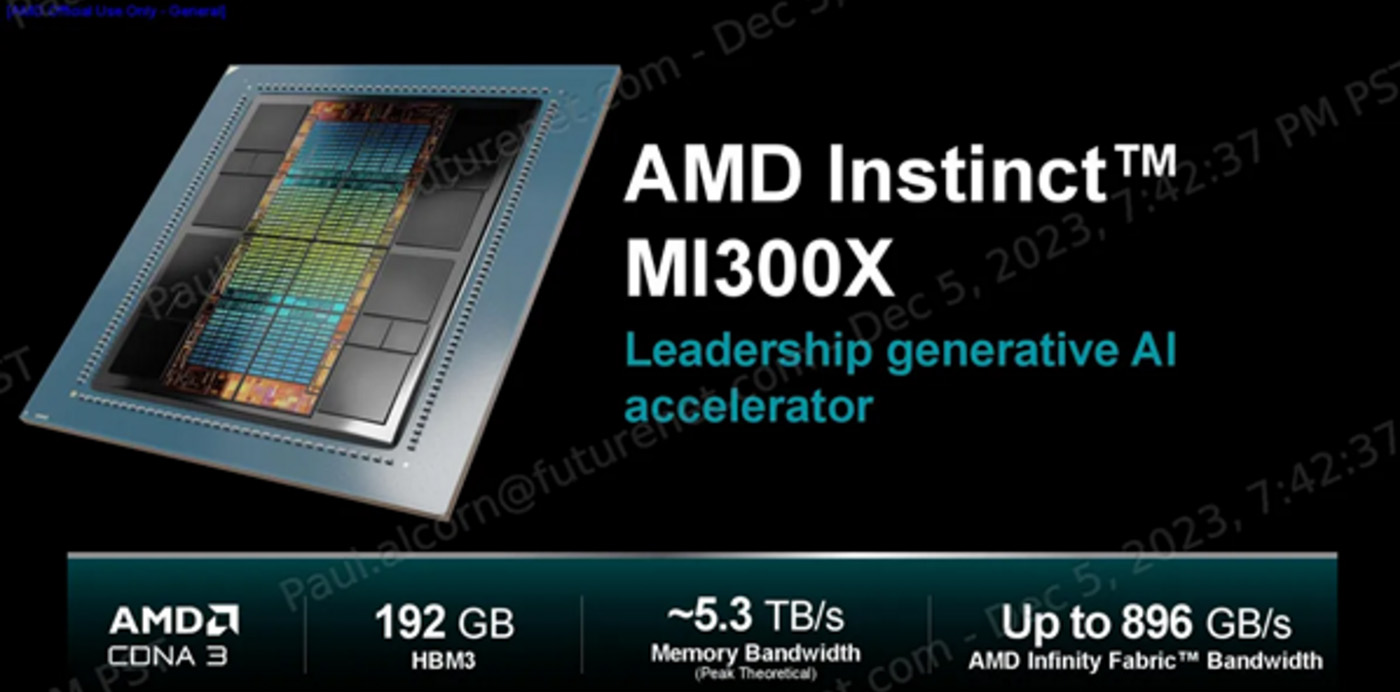

MI300X: the top AI compute GPU

Built on the new CDNA 3 architecture, the MI300X AI accelerator has a lavish configuration: 304 compute units spread across 8 XCDs, plus 192GB of HBM3 memory with 5.3 TB/s of bandwidth. Compared with the previous-generation MI250X, it has nearly 40% more compute units, 1.5 times the memory capacity, and 1.7 times the theoretical bandwidth. It also adds support for FP8 and sparse computation.
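The generational ratios quoted above can be checked with a few lines of arithmetic. The sketch below is only illustrative: the MI300X figures come from this article, while the MI250X figures (220 compute units, 128GB, 3.2 TB/s) are assumptions taken from AMD's published specifications and are not stated here.

```python
# MI300X figures are quoted in the article.
MI300X = {"compute_units": 304, "memory_gb": 192, "bandwidth_tbs": 5.3}
# MI250X figures are ASSUMED from AMD's published specs, not from the article.
MI250X = {"compute_units": 220, "memory_gb": 128, "bandwidth_tbs": 3.2}


def generation_ratios(new, old):
    """Return the new/old ratio for every spec field in `new`."""
    return {key: new[key] / old[key] for key in new}


ratios = generation_ratios(MI300X, MI250X)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
```

Under those assumed baseline figures, the ratios come out to roughly 1.38, 1.5, and 1.66, which is consistent with "nearly 40% more", "1.5 times", and "1.7 times".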

AMD also compared the MI300X with NVIDIA's H100 in practical use cases: when running inference on the 176-billion-parameter BLOOM model, its throughput reaches 1.6 times that of the H100. A single MI300X can also run a 70-billion-parameter model such as Llama 2, which simplifies enterprise LLM deployment and delivers excellent TCO.
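A rough back-of-the-envelope estimate shows why a 70-billion-parameter model can fit on one card. The sketch below assumes 16-bit weights (2 bytes per parameter), counts 1 GB as 10^9 bytes, and ignores the KV cache, activations, and runtime overhead, so real deployments need more headroom. The helper names are hypothetical.

```python
import math

HBM_CAPACITY_GB = 192  # MI300X memory capacity quoted in the article


def weight_footprint_gb(params_billions, bytes_per_param=2):
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    The default of 2 bytes per parameter is an ASSUMPTION (16-bit weights).
    """
    return params_billions * bytes_per_param


def min_devices(params_billions, bytes_per_param=2, capacity_gb=HBM_CAPACITY_GB):
    """Smallest device count whose combined memory holds the weights alone."""
    footprint = weight_footprint_gb(params_billions, bytes_per_param)
    return math.ceil(footprint / capacity_gb)


for name, size in [("Llama 2 70B", 70), ("BLOOM 176B", 176)]:
    print(name, weight_footprint_gb(size), "GB ->", min_devices(size), "device(s)")
```

Under these assumptions, the 70B weights take about 140GB and fit within a single 192GB device, while the 176B BLOOM weights take about 352GB and need at least two.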

The first data center APU ships

As NVIDIA began introducing CPU+GPU packaged solutions such as the Grace Hopper Superchip for data centers, AMD, which has long built such products for the consumer market, naturally saw the opportunity. At this conference, AMD finally announced that its first data center APU, the MI300A, has begun shipping.

Backed by 3D packaging technology and the AMD Infinity architecture, the MI300A integrates CDNA 3 GPU cores, Zen 4 CPU cores, and 128GB of HBM3 memory. Compared with the MI250X, its performance per watt nearly doubles for FP32 calculations in HPC and AI workloads.

Thanks to unified memory and cache, data transfer latency between the CPU, GPU, and HBM is greatly reduced, and all of them share the same high bandwidth. As a result, the APU can outperform a discrete CPU-plus-GPU design in both peak performance and power allocation.

Software gets a substantial upgrade too

At the Advancing AI conference, AMD updated its software as well as its hardware. ROCm, its parallel computing platform, reached version 6, which mainly optimizes AMD's Instinct series GPUs for large language models in generative AI.

As AMD's answer to NVIDIA's CUDA, ROCm has improved steadily in recent years, and arguably is no longer inferior to CUDA in terms of development support. This update adds support for new data types and introduces advanced graph and kernel optimizations, library optimizations, and state-of-the-art attention algorithms. Taking text generation as an example, compared with ROCm 5 running on the MI250, overall latency improves by about 8 times.

More importantly, OpenAI joined in as well, announcing that it will add support for AMD Instinct in Triton 3.0. Triton is an open-source, Python-like programming language that lets developers write efficient GPU code without CUDA development experience, and can be thought of as a simplified alternative to CUDA. With the latest Triton 3.0, AMD's Instinct hardware platform is effectively supported out of the box.

In fact, this cooperation had long been foreshadowed. Triton began merging ROCm code a few months ago, and had previously announced added support for AMD Instinct and Intel XPU. For now, however, ROCm on the Instinct platform still focuses on Linux development, while ROCm on the Radeon platform mainly focuses on Windows support.

Final thoughts

This Advancing AI conference signals AMD's ambitions for the AI market next year. It is no wonder that AMD raised its estimate of this year's data center AI chip market from the $30 billion it projected in June to $45 billion. The MI300X is likely to become the strongest competitor to NVIDIA's H100, and it may also become a new accelerator card that cloud service vendors compete to acquire.